Client Background

DeepMotion is a San Francisco-based tech company that builds AI-driven motion capture and animation tools. Its core product, Animate 3D, is designed for developers, animators, and virtual influencers and lets users generate motion capture animation from regular video. The platform replaces traditional mocap suits and sensors with browser-based automation, and it illustrates the growing impact of artificial intelligence in visual effects, especially in real-time and virtual production workflows.

Problem Statement

A metaverse startup needed to animate realistic avatars for its virtual social app. The characters had to walk, gesture, and emote in real time, but the team had no access to mocap suits or dedicated animators. They needed an AI-based tool that could generate motion from video at scale to populate dynamic scenes with lifelike character movement. The challenge reflects a broader need for AI-powered VFX solutions that remove traditional production barriers.

Objective

Enable realistic avatar animation from simple video uploads, for both film VFX and interactive environments, while reducing production time and letting non-technical creators contribute animated content through intuitive AI tools.

AI Integration Approach

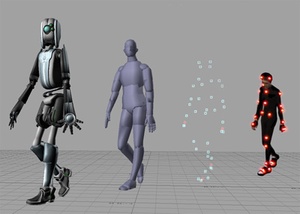

DeepMotion uses machine learning techniques, including pose estimation, kinematic tracking, and physics-based animation, to extract 3D skeletal motion from 2D video. Key features include:

- 2D-to-3D Motion Capture: Converts flat video into 3D joint animation in real time.

- Markerless Mocap: No need for suits, dots, or special backgrounds.

- Physics Layer: Adds realistic movement by simulating weight and balance.

- Foot Locking & Grounding: Ensures characters walk and move naturally with real-world constraints.

- Facial Tracking (Beta): Supports head and limited facial motion for added expressiveness.
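
To make the foot locking and grounding idea concrete, here is a minimal Python sketch. It is not DeepMotion's implementation, which the source does not describe; it only illustrates the general technique of pinning a foot in place while it touches the ground so it does not slide. The function name, the y-up axis convention, and the `contact_height` threshold are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), with y assumed to be the up axis


def lock_feet(positions: List[Vec3], ground_y: float = 0.0,
              contact_height: float = 0.02) -> List[Vec3]:
    """Pin a foot's horizontal position while it is in contact with the ground.

    positions: per-frame world positions of one foot joint (hypothetical input).
    contact_height: assumed threshold, in scene units, below which the foot
    counts as planted.
    """
    locked: List[Vec3] = []
    anchor: Optional[Tuple[float, float]] = None  # (x, z) at touchdown
    for x, y, z in positions:
        if y - ground_y <= contact_height:
            if anchor is None:
                anchor = (x, z)  # first frame of this contact phase
            # Hold the touchdown position and snap the foot onto the ground.
            locked.append((anchor[0], ground_y, anchor[1]))
        else:
            anchor = None  # foot lifted: release the lock
            locked.append((x, y, z))
    return locked


frames = [(0.0, 0.10, 0.0), (0.1, 0.01, 0.0), (0.13, 0.0, 0.01), (0.2, 0.08, 0.0)]
print(lock_feet(frames))
```

A production system would typically also blend in and out of the lock and adjust the leg with inverse kinematics; this sketch omits both to keep the core idea visible.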

The platform brings these techniques into VFX industry workflows and supports output in FBX and BVH formats for use in game engines, animation software, and XR platforms.
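
Because BVH is a plain-text format, an exported clip can be sanity-checked before it goes into an engine. The sketch below is a hedged illustration that reads the frame count and frame time from the `MOTION` section of a BVH file. The sample data and the function name are made up for this example and are not output from DeepMotion.

```python
import io
from typing import TextIO, Tuple

# Tiny hand-written BVH sample (illustrative only).
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  End Site
  {
    OFFSET 0.0 10.0 0.0
  }
}
MOTION
Frames: 2
Frame Time: 0.0333333
0 0 0 0 0 0
0 1 0 0 0 0
"""


def read_bvh_motion_header(stream: TextIO) -> Tuple[int, float]:
    """Return (frame_count, frame_time_seconds) from a BVH MOTION section."""
    in_motion = False
    frames = None
    frame_time = None
    for line in stream:
        text = line.strip()
        if text == "MOTION":
            in_motion = True
        elif in_motion and text.startswith("Frames:"):
            frames = int(text.split(":", 1)[1])
        elif in_motion and text.startswith("Frame Time:"):
            frame_time = float(text.split(":", 1)[1])
            break  # the per-frame channel data follows; not needed here
    if frames is None or frame_time is None:
        raise ValueError("MOTION header not found")
    return frames, frame_time


frames, frame_time = read_bvh_motion_header(io.StringIO(SAMPLE_BVH))
print(f"{frames} frames, {frames * frame_time:.3f} s total")
```

The same function works on a real file by passing `open(path)` instead of the in-memory sample.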

Implementation Strategy

The startup recorded basic movements (walking, sitting, waving, dancing) using smartphones. They uploaded these clips to Animate 3D, DeepMotion’s cloud-based tool, and followed this workflow:

- Uploaded raw footage to the DeepMotion dashboard.

- Selected animation preferences (e.g., physics on/off, hand tracking).

- Let the AI process the videos and return fully rigged 3D animation data.

- Imported the results into Unity to attach animations to their avatar rigs.

- Fine-tuned animation in Unity using timeline controls and animator overrides.

This workflow let them animate more than 50 unique character actions in under a week, showing how AI VFX tools can transform real-time production pipelines.

Results

- 4X Production Speed: Reduced animation development time by over 75%.

- Increased Realism: Physics-based motion created believable avatar interaction.

- Zero Specialized Hardware: No suits, markers, or dedicated capture cameras needed, just smartphone video.

- Creator-Friendly Workflow: Allowed even non-animators to contribute mocap data.
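
The first result above pairs a speed multiple with a percentage time reduction. The two are linked by reduction = 1 - 1/speedup, so a 4X speedup corresponds to exactly 75% less time, and any reduction above 75% implies a speedup above 4X. A minimal Python sketch of the conversion follows; the function names are illustrative and not from the source.

```python
def time_reduction(speedup: float) -> float:
    """Fraction of time saved when work runs `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


def speedup_from_reduction(reduction: float) -> float:
    """Speed multiple implied by saving `reduction` (0 to 1, exclusive of 1) of the time."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


print(time_reduction(4.0))           # 4X faster
print(speedup_from_reduction(0.75))  # 75% less time
```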

Impact on the Industry

DeepMotion is reshaping how motion capture is performed in virtual and interactive spaces. It lets indie game studios, metaverse platforms, and virtual influencers achieve lifelike animation without traditional infrastructure. Its application of artificial intelligence to visual effects also places it at the forefront of AI adoption in the VFX industry, promoting accessibility, speed, and creativity.

Challenges Faced

- Limited Multi-Person Capture: Currently optimized for one person per video.

- Occlusion Issues: Hidden limbs or fast movement can affect tracking quality.

- Facial Mocap Still Evolving: While head tracking is available, facial expression capture is still limited. This is an area where emerging machine learning techniques are being tested.

Future Scope

DeepMotion’s roadmap includes:

- Full-body multi-character mocap from group videos.

- AI-driven emotion tagging for contextual character reactions.

- Enhanced lip-sync and facial expression capture with voice integration.

These upgrades aim to make DeepMotion a comprehensive, real-time tool for immersive digital storytelling, broadening the impact of AI in film VFX and games.

Key Takeaways

- DeepMotion delivers markerless motion capture from video with built-in physics realism.

- It enables faster, more economical animation production across industries.

- It supports metaverse avatars, indie games, and real-time systems.

- It exemplifies the potential of artificial intelligence in visual effects to remove hardware barriers and democratize motion capture.

- The platform is a notable innovation in AI for the VFX industry, applying machine learning techniques to streamline high-quality animation.